
Multi-Arch Container Images

Normally, when building containers, the target hardware is not even a consideration: a Dockerfile is built and the images are pushed to a registry somewhere. In reality, every one of these images is specific to a platform, consisting of both an OS and an architecture. Even the V1 image specification defines both of these fields. With that specification you can at least tell whether an image will run on a target, but each reference points to an image for one specific platform, so you might end up with busybox:1-arm64 and busybox:1-ppc64le. This is not ideal if you want a single canonical reference that is platform agnostic.

In 2016, the Image Manifest Version 2, Schema 2 specification was released. It introduced manifest lists, which group the manifests for all supported platforms under a single reference. With this, a client can pull busybox:latest and immediately know which image to pull for its platform. Now all of your users can expect to find your release in one place, whether they are running Linux on aarch64, Windows on x86_64, or Linux on an IBM Z mainframe.

Using the docker manifest command, we can see what this looks like for the alpine:latest image on Docker Hub.

```
❯ docker manifest inspect alpine:latest
{
   "schemaVersion": 2,
   "mediaType": "application/vnd.docker.distribution.manifest.list.v2+json",
   "manifests": [
      {
         "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
         "size": 528,
         "digest": "sha256:e4355b66995c96b4b468159fc5c7e3540fcef961189ca13fee877798649f531a",
         "platform": {
            "architecture": "amd64",
            "os": "linux"
         }
      },
      {
         "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
         "size": 528,
         "digest": "sha256:29a82d50bdb8dd7814009852c1773fb9bb300d2f655bd1cd9e764e7bb1412be3",
         "platform": {
            "architecture": "arm",
            "os": "linux",
            "variant": "v6"
         }
      }
   ]
}
```

If you just want to build these images and do not care how it all works, skip to the last section on buildx. For the rest of you, I hope you enjoy our short journey.
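A client resolving alpine:latest reads a list like the one above and picks the entry whose platform matches its own. The Python sketch below illustrates that lookup.

- `parse_platform` and `select_digest` are hypothetical helpers.
- The exact-match rule is a simplification. Real clients may apply extra compatibility rules, for example letting an arm/v7 host fall back to an arm/v6 image.

```python
import json

# The alpine:latest manifest list shown above, trimmed to its two entries.
MANIFEST_LIST = """
{
  "schemaVersion": 2,
  "mediaType": "application/vnd.docker.distribution.manifest.list.v2+json",
  "manifests": [
    {"mediaType": "application/vnd.docker.distribution.manifest.v2+json",
     "size": 528,
     "digest": "sha256:e4355b66995c96b4b468159fc5c7e3540fcef961189ca13fee877798649f531a",
     "platform": {"architecture": "amd64", "os": "linux"}},
    {"mediaType": "application/vnd.docker.distribution.manifest.v2+json",
     "size": 528,
     "digest": "sha256:29a82d50bdb8dd7814009852c1773fb9bb300d2f655bd1cd9e764e7bb1412be3",
     "platform": {"architecture": "arm", "os": "linux", "variant": "v6"}}
  ]
}
"""


def parse_platform(spec: str):
    """Split an 'os/arch[/variant]' string such as 'linux/arm/v6'."""
    parts = spec.split("/")
    if len(parts) not in (2, 3):
        raise ValueError(f"bad platform string: {spec!r}")
    variant = parts[2] if len(parts) == 3 else None
    return parts[0], parts[1], variant


def select_digest(manifest_list: dict, spec: str):
    """Return the digest of the first entry matching spec, or None.

    Hypothetical simplified rule: os and architecture must match exactly,
    and the variant is compared only when spec includes one.
    """
    os_name, arch, variant = parse_platform(spec)
    for entry in manifest_list["manifests"]:
        platform = entry["platform"]
        if platform["os"] != os_name or platform["architecture"] != arch:
            continue
        if variant is not None and platform.get("variant") != variant:
            continue
        return entry["digest"]
    return None


if __name__ == "__main__":
    index = json.loads(MANIFEST_LIST)
    for spec in ("linux/amd64", "linux/arm/v6", "linux/arm64"):
        print(spec, "->", select_digest(index, spec))
```

An arm64 client gets no match from this trimmed list. That is the situation a complete manifest list avoids by carrying one entry per supported platform.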

Assembling the Manifest List

Now that we have a way to represent these images, how are these manifest lists assembled? Through the docker CLI, we can create and push them using the same manifest command we used to inspect.

```
❯ docker manifest create MANIFEST_LIST MANIFEST [MANIFEST...]
❯ docker manifest push [OPTIONS] MANIFEST_LIST
```

It is important to point out that these commands push only the manifest list, not the images it references, so the container registry must already have those images and their layers. The command works against the registry rather than local images, so if a referenced image does not exist in the registry, it cannot be used.

If images are already being built for multiple architectures, this is a simple addition to the CI process that greatly simplifies the distribution of images. What it does not solve is generating the images themselves. In some cases it might be possible to run docker build jobs directly on every architecture you support, but in many cases this is not feasible.
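To make the assembly step concrete, here is a Python sketch of roughly what a manifest list holds. It makes these assumptions:

- You already have the raw bytes of each pushed image manifest.
- `descriptor` and `manifest_list` are hypothetical helpers, not part of the docker CLI, and nothing is pushed.

Each entry refers to its image only by media type, size and sha256 digest of the manifest bytes. That is why the referenced images must already exist in the registry.

```python
import hashlib
import json

LIST_TYPE = "application/vnd.docker.distribution.manifest.list.v2+json"
MANIFEST_TYPE = "application/vnd.docker.distribution.manifest.v2+json"


def descriptor(raw_manifest: bytes, os_name: str, arch: str, variant=None) -> dict:
    """Describe one image manifest by content: its size and sha256 digest."""
    platform = {"architecture": arch, "os": os_name}
    if variant:
        platform["variant"] = variant
    return {
        "mediaType": MANIFEST_TYPE,
        "size": len(raw_manifest),
        # The digest covers the exact bytes the registry stores, so it must be
        # computed over the raw manifest, not over a re-serialized copy.
        "digest": "sha256:" + hashlib.sha256(raw_manifest).hexdigest(),
        "platform": platform,
    }


def manifest_list(descriptors) -> str:
    """Wrap per-platform descriptors in a Schema 2 manifest list document."""
    document = {"schemaVersion": 2, "mediaType": LIST_TYPE,
                "manifests": list(descriptors)}
    return json.dumps(document, indent=3)


if __name__ == "__main__":
    # Placeholder bytes standing in for two image manifests already in a registry.
    amd64 = descriptor(b'{"placeholder": "amd64"}', "linux", "amd64")
    armv7 = descriptor(b'{"placeholder": "armv7"}', "linux", "arm", "v7")
    print(manifest_list([amd64, armv7]))
```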

Building the Images

One way to build these images is to cross compile the binary artifacts and then add them to an existing base image for the target architecture. This process is hardly clean, and unless performance is a serious concern, I would suggest an alternative: what if we used emulation to drive the build process inside the container?

Usually, when people think of emulation, they think of emulating a full VM using something like QEMU, which can be very heavy, especially if we are just compiling a small C library. This mode of operation is system emulation, where everything from the hardware up to user space is fully emulated. QEMU has another mode of operation called user-mode emulation, in which only the program's user-space instructions are emulated. Its system calls are captured and passed to the host kernel.

To play with the concept a little, let’s take this C program. It prints the architecture it was compiled for.

```c
#include <stdio.h>

/* Pick a name for the target architecture unless -DARCH=... was given. */
#ifndef ARCH
#if defined(__x86_64__)
#define ARCH "x86_64"
#elif defined(__aarch64__)
#define ARCH "aarch64"
#elif defined(__arm__)
#define ARCH "arm"
#else
#define ARCH "Unknown"
#endif
#endif

int main(void) {
    printf("Hello, looks like I am running on %s\n", ARCH);
    return 0;
}
```

Let’s compile this program for both x86-64 and Arm. Note that the Arm build is static, so running it does not require an Arm dynamic loader or C library on the host.

```
❯ gcc hello.c -o hello-x86_64
❯ arm-linux-gnu-gcc hello.c -o hello-arm --static
```

Now let’s execute them. For the Arm binary, we can use QEMU user-mode emulation. It intercepts the write system call and has the host kernel process it, rather than emulating all the way down to a serial driver.

```
❯ ./hello-x86_64
Hello, looks like I am running on x86_64
❯ ./hello-arm
bash: ./hello-arm: cannot execute binary file: Exec format error
❯ qemu-arm hello-arm
Hello, looks like I am running on arm
```
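The "Exec format error" comes from the kernel: no loader accepts the file, because the ELF header's e_machine field says EM_ARM (40) rather than the host's EM_X86_64 (62). Below is a small Python sketch that reads those header fields. The helper names are hypothetical; the field offsets and e_machine values come from the ELF specification.

```python
import struct
import sys

# e_machine values for a few architectures, from the ELF specification / <elf.h>.
EM_NAMES = {3: "EM_386", 21: "EM_PPC64", 22: "EM_S390", 40: "EM_ARM",
            62: "EM_X86_64", 183: "EM_AARCH64"}


def elf_machine(header: bytes):
    """Return (bits, byte_order, e_machine) from the first 20 bytes of an ELF file."""
    if len(header) < 20 or header[:4] != b"\x7fELF":
        raise ValueError("not an ELF file")
    bits = {1: 32, 2: 64}[header[4]]                 # EI_CLASS
    byte_order = {1: "little", 2: "big"}[header[5]]  # EI_DATA
    fmt = "<HH" if byte_order == "little" else ">HH"
    # e_type and e_machine are the 16-bit fields right after the 16-byte e_ident.
    _e_type, e_machine = struct.unpack(fmt, header[16:20])
    return bits, byte_order, e_machine


def describe(path: str) -> str:
    with open(path, "rb") as f:
        bits, byte_order, machine = elf_machine(f.read(20))
    name = EM_NAMES.get(machine, f"unknown e_machine {machine}")
    return f"{bits}-bit {byte_order}-endian {name}"


if __name__ == "__main__":
    for path in sys.argv[1:]:
        print(path, "->", describe(path))
```

Passing hello-x86_64 and hello-arm as arguments should report EM_X86_64 for the first and a 32-bit little-endian EM_ARM binary for the second.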

What would be really useful is if qemu-arm were called automatically when we try to execute hello-arm. This is where binfmt_misc comes along, a Linux kernel feature I had not been exposed to before. To quote the Linux docs:

This Kernel feature allows you to invoke almost (for restrictions see below) every program by simply typing its name in the shell. This includes for example compiled Java(TM), Python or Emacs programs.

The powerful thing here is that the kernel can recognize when an ELF file for a non-native architecture is being executed and then pass it to an “interpreter”, in this case qemu-arm.

The registration looks like this:

```
❯ echo ':qemu-arm:M:0:\x7f\x45\x4c\x46\x01\x01\x01\x00\x00\x00\x00\x00\x00\x00\x00\x00\x02\x00\x28\x00:\xff\xff\xff\xff\xff\xff\xff\x00\xff\xff\xff\xff\xff\xff\xff\xff\xfe\xff\xff\xff:/usr/bin/qemu-arm:CF' > /proc/sys/fs/binfmt_misc/register
❯ cat /proc/sys/fs/binfmt_misc/qemu-arm
enabled
interpreter /usr/bin/qemu-arm
flags: OCF
offset 0
magic 7f454c4601010100000000000000000002002800
mask ffffffffffffff00fffffffffffffffffeffffff
```
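The registration string has the form `:name:type:offset:magic:mask:interpreter:flags`. Type M means "match on magic bytes". The kernel ANDs the start of the file with mask and compares the result with magic ANDed with the same mask. So the 00 mask byte ignores EI_OSABI, and the fe byte on e_type accepts both ET_EXEC (2) and ET_DYN (3).

The flags explain the status output:

- C computes credentials from the binary and implies O, which is why the status shows OCF.
- F opens the interpreter at registration time.

The Python sketch below parses the string above and applies that check. `unescape`, `parse_registration` and `matches` are hypothetical helpers, not a kernel API.

```python
# The registration line from above, kept raw so the \xHH escapes stay literal text.
REGISTRATION = (
    r":qemu-arm:M:0:"
    r"\x7f\x45\x4c\x46\x01\x01\x01\x00\x00\x00\x00\x00\x00\x00\x00\x00\x02\x00\x28\x00:"
    r"\xff\xff\xff\xff\xff\xff\xff\x00\xff\xff\xff\xff\xff\xff\xff\xff\xfe\xff\xff\xff:"
    r"/usr/bin/qemu-arm:CF"
)


def unescape(field: str) -> bytes:
    """Turn '\\x7f\\x45...' text into the raw bytes it describes."""
    return field.encode("ascii").decode("unicode_escape").encode("latin-1")


def parse_registration(line: str) -> dict:
    """Split ':name:type:offset:magic:mask:interpreter:flags' into fields."""
    _, name, kind, offset, magic, mask, interpreter, flags = line.split(":")
    magic_bytes = unescape(magic)
    # An omitted mask means every magic byte must match exactly.
    mask_bytes = unescape(mask) if mask else b"\xff" * len(magic_bytes)
    return {"name": name, "type": kind, "offset": int(offset or 0),
            "magic": magic_bytes, "mask": mask_bytes,
            "interpreter": interpreter, "flags": flags}


def matches(entry: dict, header: bytes) -> bool:
    """Apply the magic/mask test byte by byte: (header & mask) == (magic & mask)."""
    start = entry["offset"]
    window = header[start:start + len(entry["magic"])]
    if len(window) < len(entry["magic"]):
        return False
    return all((h & m) == (g & m)
               for h, g, m in zip(window, entry["magic"], entry["mask"]))


if __name__ == "__main__":
    entry = parse_registration(REGISTRATION)
    print(entry["name"], entry["magic"].hex(), entry["mask"].hex())
```

The decoded magic and mask match the `magic` and `mask` lines that the kernel reports in the status file above.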

Now we can just run the Arm version of the program without explicitly calling QEMU.

```
❯ ./hello-arm
Hello, looks like I am running on arm
```

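What this really does is set us up to run a container with an Arm image without the container having to be aware that any emulation is taking place. This works inside a container because the F flag tells the kernel to open the interpreter at registration time, so /usr/bin/qemu-arm does not need to exist in the container's filesystem. We can try this by forcing Docker to run the Arm alpine image from the manifest list at the beginning of the article: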

```
❯ docker run --rm alpine:latest@sha256:29a82d50bdb8dd7814009852c1773fb9bb300d2f655bd1cd9e764e7bb1412be3 uname -a
Linux 4003fb15be7a 5.3.7-200.fc30.x86_64 #1 SMP Fri Oct 18 20:13:59 UTC 2019 armv7l Linux
```

Being able to run an Arm container on our x86_64 hardware unlocks the original goal: creating cross-platform images. Let’s take a simple Dockerfile for building our C program. Note that we are pinning the digest of the Arm alpine image.

```dockerfile
FROM alpine:latest@sha256:29a82d50bdb8dd7814009852c1773fb9bb300d2f655bd1cd9e764e7bb1412be3 AS builder
RUN apk add build-base
WORKDIR /home
COPY hello.c .
RUN gcc hello.c -o hello

FROM alpine:latest@sha256:29a82d50bdb8dd7814009852c1773fb9bb300d2f655bd1cd9e764e7bb1412be3
WORKDIR /home
COPY --from=builder /home/hello .
ENTRYPOINT ["./hello"]
```

```
❯ docker build -t hello-arm .
<...>
Successfully built 77154bf3d6c9
Successfully tagged hello-arm:latest
❯ docker run --rm hello-arm
Hello, looks like I am running on arm
```

It is worth pointing out that when experimental features are turned on, the docker CLI provides a --platform flag, which saves you from having to specify the digest for the Arm image. Note, though, that it only affects the pull. If alpine:latest is already present locally as the Arm image and you switch the platform flag to x86_64, you risk building on the wrong image. You can work around this by forcing a pull with --pull.

Putting it Together

Using this and the docker manifest command, we can now build everything we need.

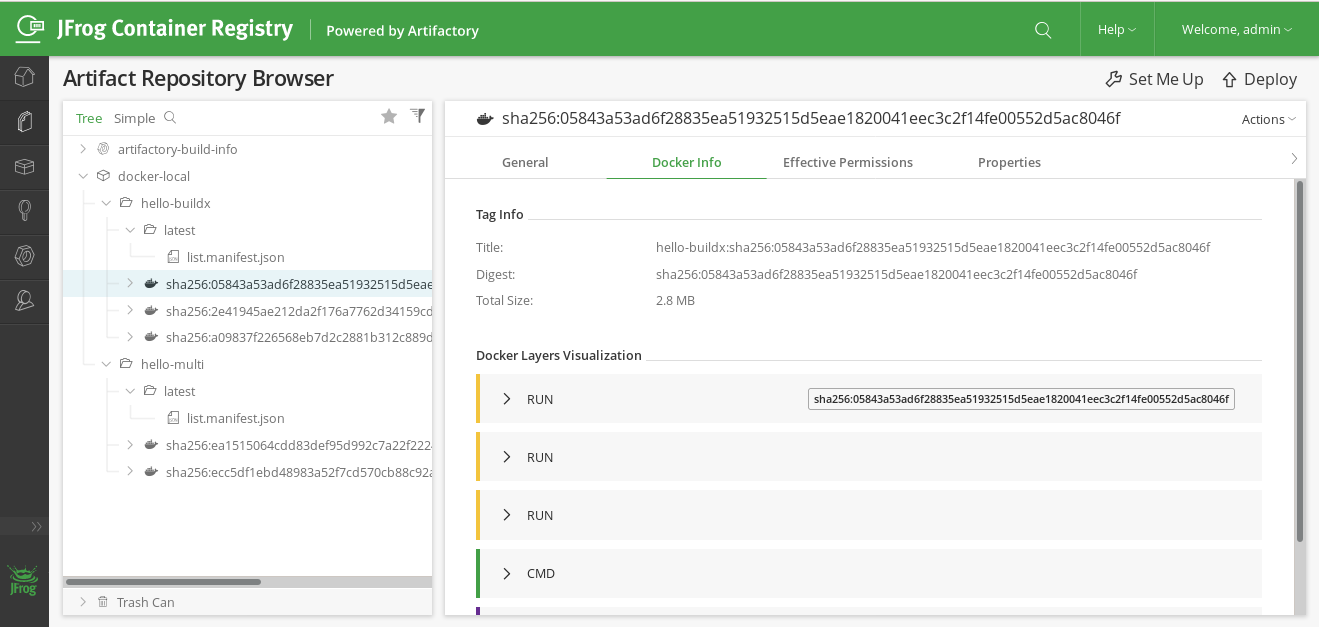

This requires a Docker registry. It is very simple to spin up the JFrog Container Registry locally, which is what I will use for the rest of this article. Make sure you have created a Docker repository called docker-local and have authenticated with it.

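With the digests removed from the Dockerfile’s FROM lines, so that both stages use plain alpine:latest and --platform decides which image is pulled, the whole flow now looks like this: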

```
❯ docker build --platform linux/amd64 -t localhost:8081/docker-local/hello-amd64 --pull .
❯ docker build --platform linux/arm/v7 -t localhost:8081/docker-local/hello-armv7 --pull .
❯ docker push localhost:8081/docker-local/hello-amd64
❯ docker push localhost:8081/docker-local/hello-armv7
❯ docker manifest create --insecure \
    localhost:8081/docker-local/hello-multi:latest \
    localhost:8081/docker-local/hello-amd64 \
    localhost:8081/docker-local/hello-armv7
❯ docker manifest push --insecure localhost:8081/docker-local/hello-multi:latest
```

Using docker manifest inspect, we can now see the manifest list just as we did with alpine from Docker Hub:

```
❯ docker manifest inspect --insecure localhost:8081/docker-local/hello-multi:latest
{
   "schemaVersion": 2,
   "mediaType": "application/vnd.docker.distribution.manifest.list.v2+json",
   "manifests": [
      {
         "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
         "size": 736,
         "digest": "sha256:ea1515064cdd83def95d992c7a22f22240930db16f656e0c40213e05a3a650e9",
         "platform": {
            "architecture": "amd64",
            "os": "linux"
         }
      },
      {
         "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
         "size": 736,
         "digest": "sha256:ecc5df1ebd48983a52f7cd570cb88c92ada135718a4cc2e0730259d6af052599",
         "platform": {
            "architecture": "arm",
            "os": "linux"
         }
      }
   ]
}
```

A Simpler Path with buildx

If that all seemed like a lot of extra bits to wire together just to build for two architectures, bits that might break if you look at them wrong, you are not wrong. Doing this on Windows or macOS is also likely to require running it all in a virtual machine. This is why there is buildx, a Docker CLI plugin that leverages BuildKit to make this all much easier. The cool thing is that under the hood it does basically all of the same things. It also makes it easy to add native builder nodes for architectures where you have the hardware, which performs better than QEMU.

There are more details at https://github.com/docker/buildx, but here is the short of it.

If you are using Docker CE, make sure you are running the edge channel and have experimental features turned on. If you are using Docker Desktop, you do not have to worry about installing qemu-user for Arm, as that is already handled. On Linux, you will want to use your distribution’s package for this, or register the QEMU binaries using this Docker image:

```
❯ docker run --rm --privileged docker/binfmt:66f9012c56a8316f9244ffd7622d7c21c1f6f28d
```

If you use the Docker image, the registrations in /proc/sys/fs/binfmt_misc are lost on reboot, so make sure they are registered before trying any builds.

```
❯ ls /proc/sys/fs/binfmt_misc
qemu-aarch64 qemu-arm qemu-ppc64le qemu-s390x register status
```
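Because these registrations vanish on reboot, it can be handy to check them from a script before a build. Below is a Python sketch that assumes the entry file format shown earlier: a first line of `enabled` or `disabled`, followed by key/value lines such as `interpreter /usr/bin/qemu-arm`. `parse_entry` and `qemu_entries` are hypothetical helpers.

```python
import os


def parse_entry(text: str) -> dict:
    """Parse the contents of one /proc/sys/fs/binfmt_misc/<name> file."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    if not lines:
        return {"enabled": False}
    info = {"enabled": lines[0] == "enabled"}
    for line in lines[1:]:
        key, _, value = line.partition(" ")
        info[key.rstrip(":")] = value.strip()  # "flags: OCF" is stored under "flags"
    return info


def qemu_entries(root: str = "/proc/sys/fs/binfmt_misc") -> dict:
    """Map each qemu-* registration under root to its parsed entry."""
    if not os.path.isdir(root):
        return {}
    entries = {}
    for name in sorted(os.listdir(root)):
        if name.startswith("qemu-"):
            with open(os.path.join(root, name)) as f:
                entries[name] = parse_entry(f.read())
    return entries
```

On the host above, `qemu_entries()` should return entries for qemu-aarch64, qemu-arm, qemu-ppc64le and qemu-s390x. Each entry's `enabled` flag and `interpreter` path can be checked before running a build.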

At this point, you should have a new docker buildx command.

```
❯ docker buildx

Usage: docker buildx COMMAND

Build with BuildKit

Management Commands:
  imagetools  Commands to work on images in registry

Commands:
  bake        Build from a file
  build       Start a build
  create      Create a new builder instance
  inspect     Inspect current builder instance
  ls          List builder instances
  rm          Remove a builder instance
  stop        Stop builder instance
  use         Set the current builder instance
  version     Show buildx version information
```

We first need to register a new builder and then inspect what we can build with it.

```
❯ docker buildx create --use
strange_williamson
❯ docker buildx inspect --bootstrap
[+] Building 2.2s (1/1) FINISHED
=> [internal] booting buildkit 2.2s
=> => pulling image moby/buildkit:buildx-stable-1 1.4s
=> => creating container buildx_buildkit_strange_williamson0 0.7s
Name: strange_williamson
Driver: docker-container

Nodes:
Name: strange_williamson0
Endpoint: unix:///var/run/docker.sock
Status: running
Platforms: linux/amd64, linux/arm64, linux/ppc64le, linux/s390x, linux/386, linux/arm/v7, linux/arm/v6
```
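Before kicking off a build, you may want to confirm that every platform you plan to pass to --platform appears in the builder’s Platforms line. Below is a Python sketch that parses inspect output in the format shown above. The helper names are hypothetical, and the parsing assumes that exact `Platforms:` layout.

```python
def builder_platforms(inspect_output: str) -> set:
    """Collect platforms from the 'Platforms:' line(s) of buildx inspect output."""
    found = set()
    for line in inspect_output.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip() == "Platforms":
            found.update(p.strip() for p in value.split(",") if p.strip())
    return found


def missing_platforms(requested: str, inspect_output: str) -> list:
    """Return the entries of a --platform value that the builder does not list."""
    available = builder_platforms(inspect_output)
    wanted = [p.strip() for p in requested.split(",") if p.strip()]
    return [p for p in wanted if p not in available]
```

For example, feed it the text from `subprocess.run(["docker", "buildx", "inspect"], capture_output=True, text=True).stdout`. An empty result means every requested platform is available.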

We can use buildx in mostly the same way we used docker build before. The difference is that this time we supply all of the platforms we are targeting, and the build runs in BuildKit.

```
❯ docker buildx build --platform linux/amd64,linux/arm64,linux/ppc64le .
WARN[0000] No output specified for docker-container driver. Build result will only remain in the build cache. To push result image into registry use --push or to load image into docker use --load
[+] Building 15.3s (28/28) FINISHED
=> [internal] booting buildkit 0.2s
=> => starting container buildx_buildkit_strange_williamson0 0.2s
=> [internal] load .dockerignore 0.0s
=> => transferring context: 2B 0.0s
=> [internal] load build definition from Dockerfile 0.0s
=> => transferring dockerfile: 91B 0.0s
=> [linux/amd64 internal] load metadata for docker.io/library/alpine:latest 2.4s
=> [linux/ppc64le internal] load metadata for docker.io/library/alpine:latest 2.4s
=> [linux/arm64 internal] load metadata for docker.io/library/alpine:latest 2.4s
=> [internal] load build context 0.0s
=> => transferring context: 384B 0.0s
=> [linux/arm64 builder 1/5] FROM docker.io/library/alpine@sha256:c19173c5ada6 0.7s
=> => resolve docker.io/library/alpine@sha256:c19173c5ada610a5989151111163d28a 0.0s
=> => sha256:8bfa913040406727f36faa9b69d0b96e071b13792a83ad69c 2.72MB / 2.72MB 0.4s
=> => sha256:61ebf0b9b18f3d296e53e536deec7714410b7ea47e4d0ae3c 1.51kB / 1.51kB 0.0s
=> => sha256:c19173c5ada610a5989151111163d28a67368362762534d8a 1.64kB / 1.64kB 0.0s
=> => sha256:1827be57ca85c28287d18349bbfdb3870419692656cb67c4cd0f5 528B / 528B 0.0s
=> => unpacking docker.io/library/alpine@sha256:c19173c5ada610a5989151111163d2 0.1s
=> [linux/amd64 builder 1/5] FROM docker.io/library/alpine@sha256:c19173c5ada6 0.9s
=> => resolve docker.io/library/alpine@sha256:c19173c5ada610a5989151111163d28a 0.0s
=> => sha256:965ea09ff2ebd2b9eeec88cd822ce156f6674c7e99be082c7 1.51kB / 1.51kB 0.0s
=> => sha256:c19173c5ada610a5989151111163d28a67368362762534d8a 1.64kB / 1.64kB 0.0s
=> => sha256:e4355b66995c96b4b468159fc5c7e3540fcef961189ca13fee877 528B / 528B 0.0s
=> => sha256:89d9c30c1d48bac627e5c6cb0d1ed1eec28e7dbdfbcc04712 2.79MB / 2.79MB 0.5s
=> => unpacking docker.io/library/alpine@sha256:c19173c5ada610a5989151111163d2 0.2s
=> [linux/ppc64le builder 1/5] FROM docker.io/library/alpine@sha256:c19173c5ad 0.7s
=> => resolve docker.io/library/alpine@sha256:c19173c5ada610a5989151111163d28a 0.0s
=> => sha256:cd18d16ea896a0f0eb99be52a9722ffae9a5ac35cf28cb8b9 2.81MB / 2.81MB 0.4s
=> => sha256:803924e7a6c178a7b4c466cf6a70c9463e9192ef439063e6f 1.51kB / 1.51kB 0.0s
=> => sha256:c19173c5ada610a5989151111163d28a67368362762534d8a 1.64kB / 1.64kB 0.0s
=> => sha256:6dff84dbd39db7cb0fc928291e220b3cff846e59334fd66f27ace 528B / 528B 0.0s
=> => unpacking docker.io/library/alpine@sha256:c19173c5ada610a5989151111163d2 0.1s
=> [linux/arm64 stage-1 2/3] WORKDIR /home 0.1s
=> [linux/arm64 builder 2/5] RUN apk add build-base 6.5s
=> [linux/ppc64le stage-1 2/3] WORKDIR /home 0.1s
=> [linux/ppc64le builder 2/5] RUN apk add build-base 9.0s
=> [linux/amd64 builder 2/5] RUN apk add build-base 6.4s
=> [linux/amd64 stage-1 2/3] WORKDIR /home 0.0s
=> [linux/amd64 builder 3/5] WORKDIR /home 0.0s
=> [linux/arm64 builder 3/5] WORKDIR /home 0.1s
=> [linux/amd64 builder 4/5] COPY hello.c . 0.0s
=> [linux/arm64 builder 4/5] COPY hello.c . 0.0s
=> [linux/amd64 builder 5/5] RUN gcc hello.c -o hello 0.1s
=> [linux/arm64 builder 5/5] RUN gcc hello.c -o hello 2.6s
=> [linux/amd64 stage-1 3/3] COPY --from=builder /home/hello . 0.0s
=> [linux/ppc64le builder 3/5] WORKDIR /home 0.7s
=> [linux/arm64 stage-1 3/3] COPY --from=builder /home/hello . 0.7s
=> [linux/ppc64le builder 4/5] COPY hello.c . 0.5s
=> [linux/ppc64le builder 5/5] RUN gcc hello.c -o hello 1.0s
=> [linux/ppc64le stage-1 3/3] COPY --from=builder /home/hello . 0.4s
```

Since we are using the docker-container driver to do the building, the resulting images are isolated from the usual image store by default. We are warned about this and need to provide a way to export them. One option is to export the build as an OCI archive. Exporting multi-platform images to the Docker daemon is currently problematic, because the docker exporter cannot load a manifest list into the daemon’s image store. That makes the OCI exporter a reasonable choice for local inspection.

```
❯ docker buildx build --platform linux/amd64,linux/arm64,linux/ppc64le -o type=oci,dest=- . > myimage-oci.tar
```
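The archive follows the OCI image layout: an index.json at the top, plus content-addressed blobs under `blobs/<algorithm>/<hex>`. The Python sketch below walks that layout to list the platform of each image. It makes these assumptions:

- A multi-platform build may be recorded as a nested image index referenced from index.json, so the sketch follows index entries recursively.
- Tar member names may or may not carry a leading `./`.
- `walk_index` and `list_platforms` are hypothetical helpers.

```python
import json
import tarfile

INDEX_TYPES = {
    "application/vnd.oci.image.index.v1+json",
    "application/vnd.docker.distribution.manifest.list.v2+json",
}


def walk_index(read) -> list:
    """Return (platform, digest) pairs; read(name) yields bytes from the layout."""
    results = []
    pending = list(json.loads(read("index.json"))["manifests"])
    while pending:
        desc = pending.pop(0)
        if desc.get("mediaType") in INDEX_TYPES:
            # A nested index: load its blob and queue the entries it lists.
            algorithm, hexdigest = desc["digest"].split(":", 1)
            nested = json.loads(read(f"blobs/{algorithm}/{hexdigest}"))
            pending.extend(nested["manifests"])
        else:
            p = desc.get("platform", {})
            parts = [p.get("os"), p.get("architecture"), p.get("variant")]
            name = "/".join(x for x in parts if x) or "unknown"
            results.append((name, desc["digest"]))
    return results


def list_platforms(path: str) -> list:
    """Open an OCI archive (tar) and walk its index."""
    with tarfile.open(path) as tar:
        members = {}
        for m in tar.getmembers():
            members[m.name[2:] if m.name.startswith("./") else m.name] = m

        def read(name):
            return tar.extractfile(members[name]).read()

        return walk_index(read)
```

If the build above succeeded, `list_platforms("myimage-oci.tar")` should report linux/amd64, linux/arm64 and linux/ppc64le entries.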

One very convenient tool for inspecting container images and image repositories is skopeo. Make sure you are using a recent version: v0.1.40 brought better OCI support as well as the --all flag for copy, which is needed for manifest lists.

With skopeo we can convert the OCI archive to a docker archive and inspect it. A docker archive holds a single image rather than a manifest list, so skopeo copies only the image for the host platform. The blob 89d9c30c1d48 below is the amd64 alpine layer from the build log. All three images appear when we copy the whole list to a registry with --all.

```
❯ skopeo copy oci-archive:../myimage-oci.tar docker-archive:../myimage-docker.tar -f v2s2
Getting image source signatures
Copying blob 89d9c30c1d48 done
Copying blob e9edb727823b done
Copying blob 9fbf10e0f163 done
Copying config 2c3d27a535 done
Writing manifest to image destination
Storing signatures
❯ skopeo inspect docker-archive:../myimage-docker.tar --raw | python3 -m json.tool
{
    "schemaVersion": 2,
    "mediaType": "application/vnd.docker.distribution.manifest.v2+json",
    "config": {
        "mediaType": "application/vnd.docker.container.image.v1+json",
        "size": 1168,
        "digest": "sha256:2c3d27a535333188b64165c72740387e62f79f836c2407e8f8d2b679b165b2b9"
    },
    "layers": [
        {
            "mediaType": "application/vnd.docker.image.rootfs.diff.tar.gzip",
            "size": 5814784,
            "digest": "sha256:77cae8ab23bf486355d1b3191259705374f4a11d483b24964d2f729dd8c076a0"
        },
        {
            "mediaType": "application/vnd.docker.image.rootfs.diff.tar.gzip",
            "size": 3072,
            "digest": "sha256:11d5c669f7975adad168faa01b6c54aeea9622c9fcb294396431ee1dd73d012f"
        },
        {
            "mediaType": "application/vnd.docker.image.rootfs.diff.tar.gzip",
            "size": 24064,
            "digest": "sha256:5f985e16416a55b548f80ebd937b3f4f1e77b2967ab0577e1eb0b4c07a408343"
        }
    ]
}
```

We can also use skopeo to upload the images and the manifest list to our JFrog Container Registry.

```
❯ skopeo copy --dest-tls-verify=false --dest-creds=user:pass \
    oci-archive:myimage-oci.tar \
    docker://localhost:8081/docker-local/hello-buildx \
    -f v2s2 --all
Getting image list signatures
Copying 3 of 3 images in list
Copying image sha256:75f9363c559cb785c748e6ce22d9e371e1a71de776970a00a9b550386c73f76e (1/3)
Getting image source signatures
Copying blob 89d9c30c1d48 skipped: already exists
Copying blob e9edb727823b skipped: already exists
Copying blob 9fbf10e0f163 skipped: already exists
Copying config 2c3d27a535 done
Writing manifest to image destination
Storing signatures
Copying image sha256:9bd94cb2701dead0366850493f9220d74e3d271ee64d5ce9ddc40747d8d15004 (2/3)
Getting image source signatures
Copying blob 8bfa91304040 skipped: already exists
Copying blob 7dff5f8d776f skipped: already exists
Copying blob d53560c61a67 done
Copying config 551e2ac7ec done
Writing manifest to image destination
Storing signatures
Copying image sha256:6db849e4705f7f10621841084f9ac34e295e841521d264567cd2dcba9094c4d8 (3/3)
Getting image source signatures
Copying blob cd18d16ea896 skipped: already exists
Copying blob 0b02afc5b3b5 skipped: already exists
Copying blob 999706aeff73 done
Copying config b4592c67c0 done
Writing manifest to image destination
Storing signatures
Writing manifest list to image destination
Storing list signatures
```

Image Distribution

The multi-arch images we generated with both the manual flow and the buildx-managed flow are accessible through the registry just as any container user would expect. The only difference is that users in the IoT and enterprise spaces can now consume our application without worrying about the hardware they are running on. I hope you will consider leveraging some of these methods to distribute your software to a broader audience.